Research

Fundamental 3D Perception Research

We develop novel signal processing, sensor fusion, and optimization techniques for 3D localization and mapping. Our topics of interest include:

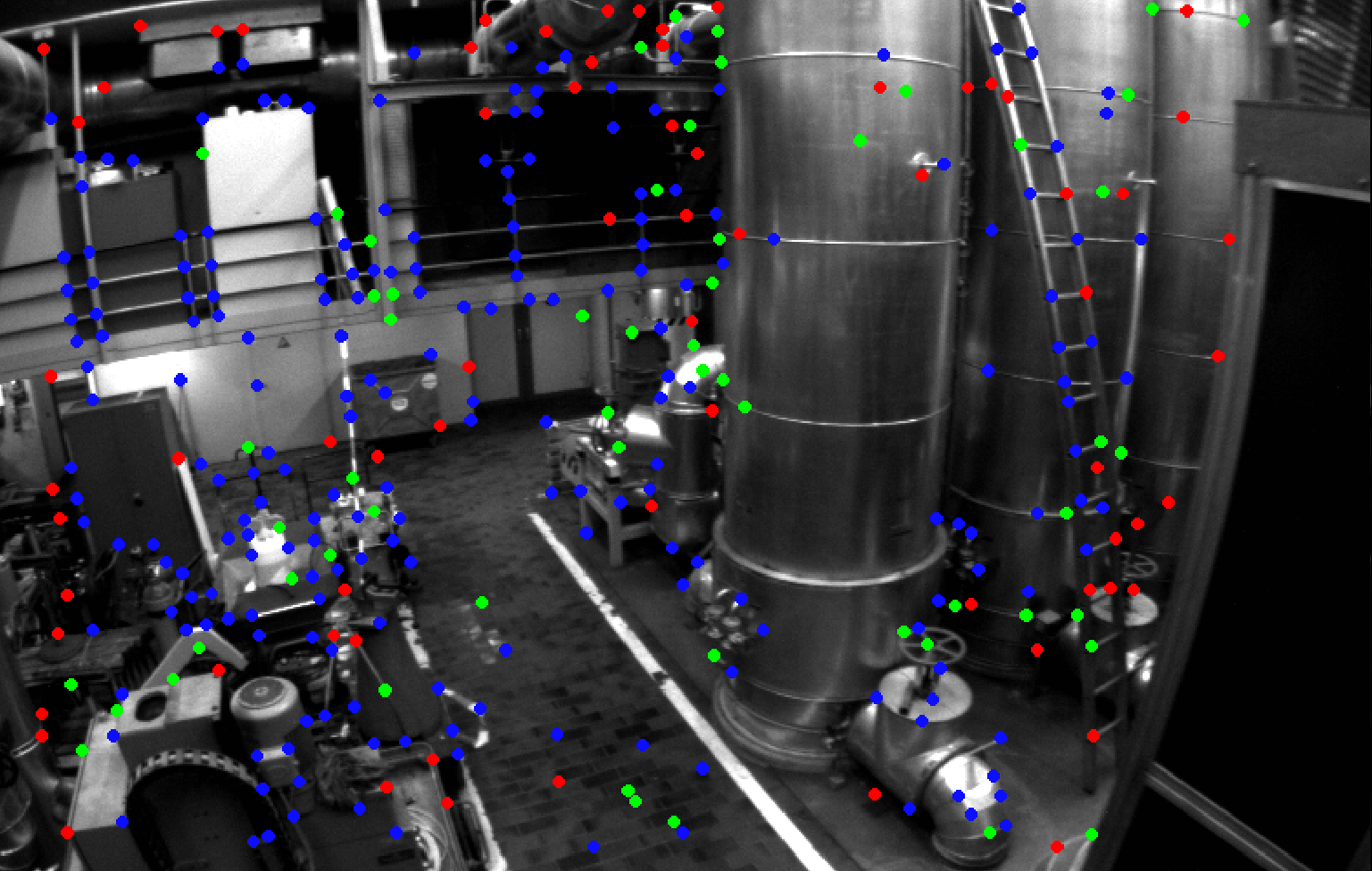

Acquisition: geometry, calibration, time synchronization, infrared imaging, multi-spectral imaging

Analysis: feature detection and description, shape recognition, segmentation, motion estimation and tracking

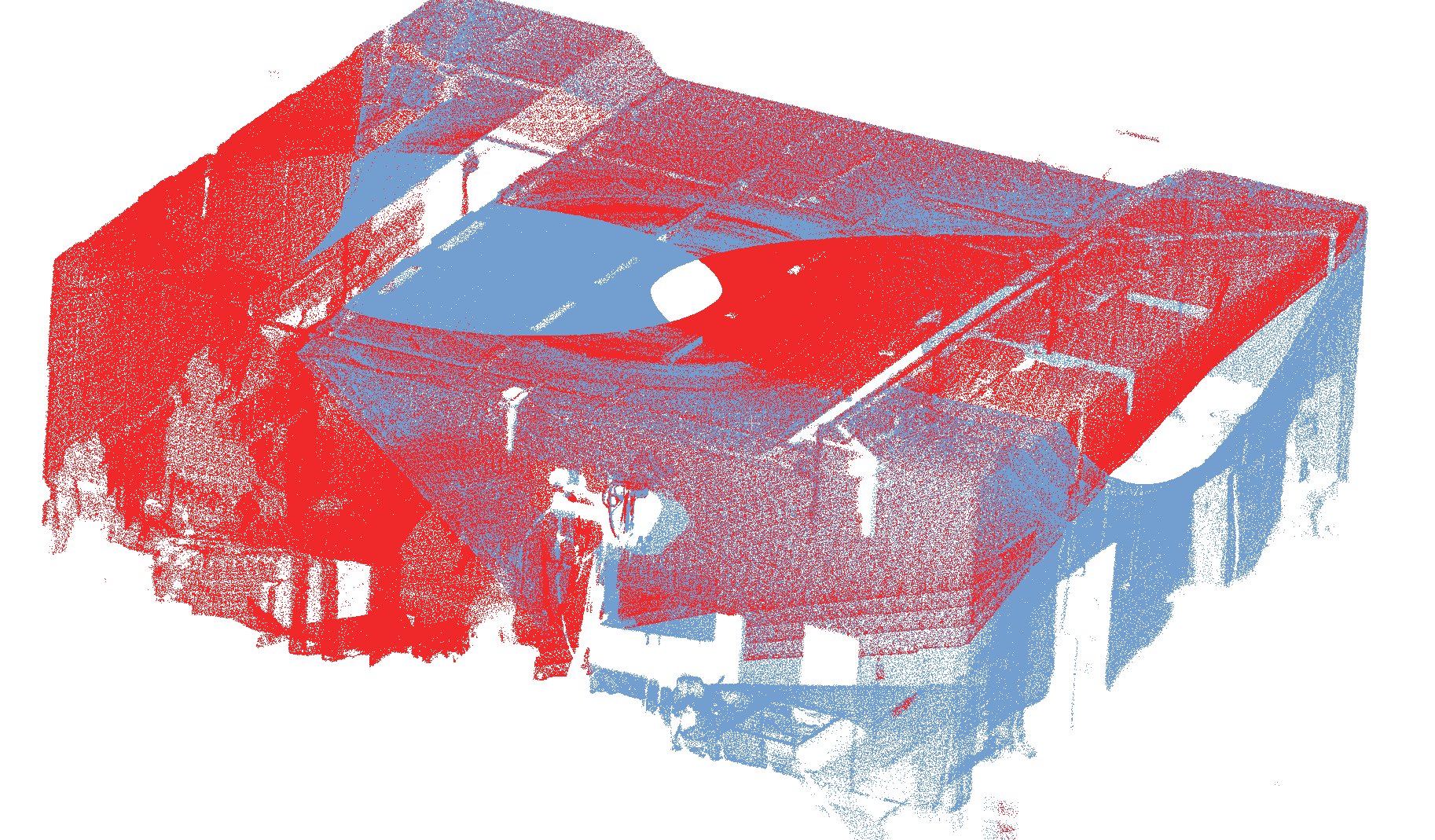

Sensor fusion: multi-modal data integration, state estimation, filtering, non-linear optimization, continuous-time optimization

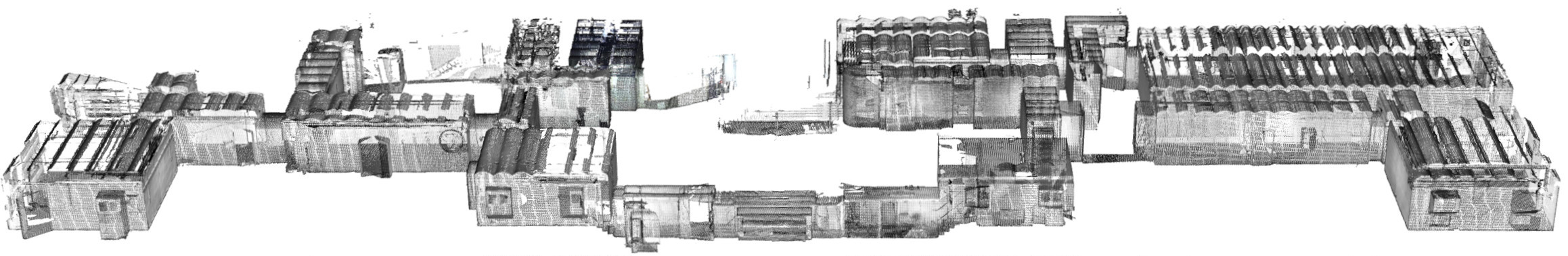

Reconstruction: structure from motion, multi-view stereo, dense mapping, simultaneous localization and mapping

Rendering: 3D data visualization, neural rendering, synthetic data generation

Some example developments include:

3D Perception for Downstream Applications

In collaboration with other departments and research units, we deliver state-of-the-art 3D perception systems that serve as components of larger systems in applications such as:

- Autonomous systems: robotics, drones, self-driving vehicles, path planning

- Augmented and virtual reality (AR/VR): immersive environments, spatial tracking, human-computer interaction, immersive rendering

- Demining: mine or threat detection and classification

- Geospatial mapping: 3D mapping for situational awareness, digital twins

Some example developments include: